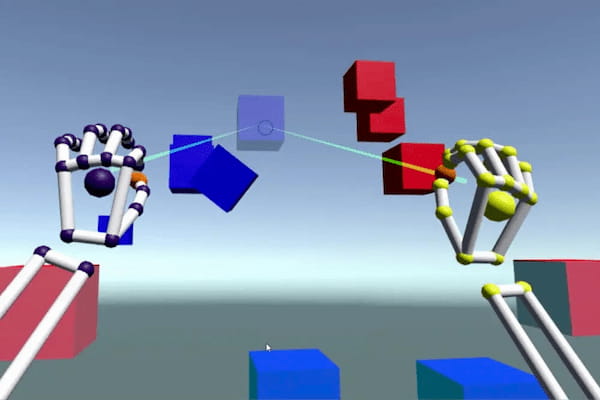

Gaze + Pinch in Action

The accompanying paper, co-written with Ken Pfeuffer, was published in the ACM Digital Library for the 5th ACM Symposium on Spatial User Interaction (SUI 2017).

You can find more of my publications on Google Scholar. There is also a full talk on the Gaze+Pinch paper, given by my colleague Ken Pfeuffer at SUI 2017.